What are vector databases and should you be using one?

Vector databases aren't a new concept, but they remain unfamiliar to many developers. They're being used in an increasing variety of applications, and have become an essential component of many projects, especially those involving novel AI applications.

Here, we investigate the nature of vector databases, exploring what they are, their architecture, their use cases and how to integrate them into your software development workflow, and provide you with guidance on how to use them in your own application, as well as best practices for working with them.

What is a vector?

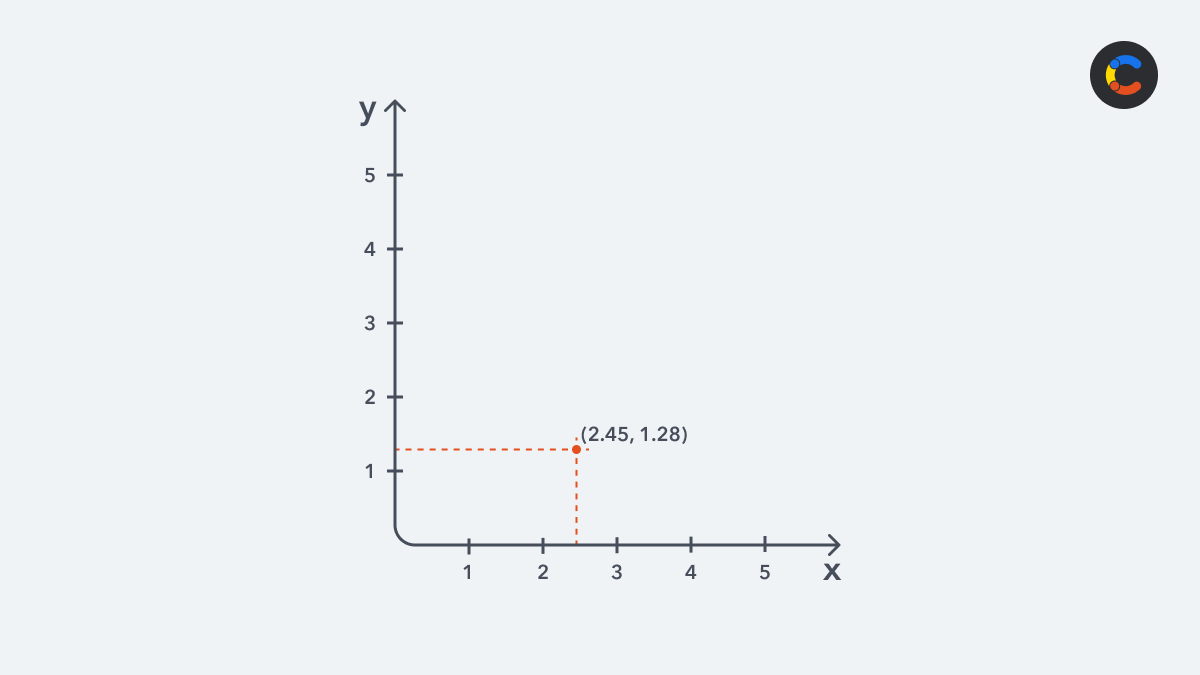

A vector is a dynamic array of numbers, and they are commonly used in math and physics to describe spatial coordinates. For example, the two-dimensional vector (2.45, 1.28) expresses the location of a point in two-dimensional space:

In machine learning, vectors are still a dynamic array of numbers, but each number describes a feature of a particular data point. For example, if you had a dataset about flowers that contained data about two different features (petal length and petal width), each flower could be represented as a two-dimensional vector:

ID | Petal length (in cm) | Petal width (in cm) | Vector |

|---|---|---|---|

FL1 | 2.5 | 1.6 | (2.5, 1.6) |

FL2 | 1.1 | 0.9 | (1.1, 0.9) |

FL3 | 2.7 | 0.4 | (2.7, 0.4) |

FL4 | 2.7 | 1.4 | (2.7, 1.4) |

FL5 | 0.9 | 1.3 | (0.9, 1.3) |

FL6 | 0.5 | 0.5 | (0.5, 0.5) |

FL7 | 1.8 | 0.9 | (1.8, 0.9) |

FL8 | 0.4 | 1.1 | (0.4, 1.1) |

Plotting these vectors on a graph allows us to see that the data points for flowers FL1 and FL4 are the closest, which means they're the most similar to each other.

As the number of dimensions becomes higher, it's harder for us to visualize which items in the dataset are similar based on their features. A two-dimensional vector is easy to plot, but in machine learning, vectors can have thousands or millions of dimensions. This is impossible for us to visualize, but luckily we have machines to do the work of estimating the similarity for us!

It's worth noting that a single vector always corresponds to one particular object — for example, a word, an image, a video, or a document. Once an embedding model has generated some vectors, they can be stored in a vector database, alongside the original content that they represent — such as a page URL, link to an image, or a product SKU.

In the context of machine learning, vectors are sometimes called vector embeddings — this just means a vector that was produced by an embedding model.

What is a vector database?

A vector database is a specialized type of database that stores the high-dimensional vectors that certain types of machine learning (ML) models generate. These models are collectively known as embedding models, and include natural language processing (NLP) models like ChatGPT as well as non-text-based models like those used for computer vision tasks. When it comes to NLP models, vector databases can help speed up work that involves things like similarity search and classification.

For these types of ML applications, the machine learning model needs to figure out which data is similar to other data. For example, in a scenario where an ML application is tasked with recommending products based on previous purchases, it would need to determine which products are similar to each other. In the below example, there are clusters for each of clothing, phones, and board games, but these clusters are located further apart as they are less similar to each other. Within the clothing category itself, similar items are closer to each other. For example, "black t-shirt" and "black polo shirt" are extremely close to each other (being the same color and shape), whereas "blue jeans" is further away.

Embedding models help us work out which objects are similar by converting very complex data (such as text, images, documents or videos) into vectors, which consist of numerical data. Given that we need to quantify which objects are similar, it really helps to have numerical data for solving this problem. Converting data into vectors is a way of simplifying data, while still retaining the most important features of it.

What are vector databases used for?

Vector databases solve specific AI/ML problems

Although vector databases are highly optimized for certain ML problems, they are designed for a very specific use case and are not suited for storing non-vectors. You don't want to switch out your relational database with a vector database if you're storing standard relational or document-based data!

For many ML purposes, it's common to use both vector databases and relational databases at the same time for different parts of your application. For example, you might use a relational database to store transactional data and a vector database for handling AI/ML queries within the same application.

Common use cases for vector databases

Vector databases are appropriate when you need to operationalize an ML model in your coding project, as vectors are the fastest way to work out which objects are similar to each other, and vector databases are the most efficient way to store vectors.

Some common use cases in this category include:

Content discovery: Improving the algorithms behind how your users engage with your content will massively improve the user experience of your site. NewEgg, a store that sells PC components, uses ML to power its Custom PC Builder — its own “AI expert to help you build.” You can give it a prompt like "I want to build a PC that can play Red Dead Redemption 2 for under $700" and it will present you with some suggested combinations of components.

Recommendation systems: Vector databases power the ML models behind the recommendation systems of big companies like Netflix and Amazon. The vectors are used to determine which programs or products are similar to the ones that other users like you have watched or purchased based on data including genre, release date, popularity, and starring actors (Netflix) or product category, price, purchase history, or keywords (Amazon). But as ML vectors can have thousands of features, some of these can't be so easily described or even understood by humans. It turns out that machines are better at working out which features are the most relevant, even if we can't understand them.

Chatbots and generative AI: These take natural language as their input, which is complex unstructured data. Converting this into vector data means it can be stored numerically, which makes it easier to simplify using principal component analysis. The most intelligent chatbots out there all make use of vectors, including ChatGPT, which uses Azure Cosmos DB for vector similarity search.

What are the benefits of using vector databases for ML problems?

We've already mentioned that vectors are the fastest way to do classification and similarity searches when dealing with very large datasets containing high-dimensional data. They’re much faster than alternatives like graph databases (which are better for dealing with low-dimensional highly-related data, like suggesting new friends in a social network or detecting fraud by analyzing relationships between people and businesses).

High-dimensional data is data with a very large number of features (you can picture this as being like a relational database with a very large number of columns), even if the sample size itself (number of rows) isn't very large.

And while it's technically possible to work with vectors without the use of vector databases (for example, by storing them in a relational database, NoSQL database, flat files (like CSV or JSON), or in-memory data structures) the benefits of using a vector database are so great that you'll never need to use the alternatives provided you're dealing with high-dimensional data.

Let's first look at the benefits of a proper database (of any kind) vs. flat files or in-memory data structures like Python arrays:

Full CRUD capabilities: If you work with real-time systems and need to regularly update your vectors, you'll need to use a database, as they are designed to have efficient and reliable CRUD (create, read, update, and delete) operations. Updating data in flat files means rewriting parts of the file, which is inefficient, and storing data in an in-memory data structure could lead to disastrous data loss if the system runs out of memory.

Sharding and replication: These come as part of any standard database. Sharding (partitioning your data across multiple nodes) means that your queries will be faster, as you can parallelize your read and write operations. Setting up database replication will give your system better fault tolerance as your data will be distributed across nodes.

Built-in access control: Databases make it easy to use multi-tenancy to partition data on a per-customer basis.

More reliable backup and recovery: Database backups allow you to preserve the consistency of all transactions so you never lose any data, and when you need to recover your data you can roll back to an exact point in time.

Finally, let's look at why a vector database in particular is the best type of database for ML problems.

A vector database's index is specialized for organizing vectors for high-dimensional similarity search, whereas relational databases and NoSQL databases have completely different indexing systems.

Vector databases store vector metadata, which can be used to filter out irrelevant data. A query to a vector database will therefore only be run against a relevant subset of the entire dataset, saving a lot of time.

The architecture of a vector database

Storage

Alongside vector embeddings, a vector database stores the original data that each vector represents, along with metadata associated with the vectors, such as IDs, timestamps, or tags, which help give more context for filtering your queries.

Indexes

An index is a data structure that organizes the vector embeddings in a way that will optimize the similarity search algorithm within a vector database. Just like with relational databases, an index helps speed up database queries; however, the data structure of a vector database's index is quite different to that of a relational database.

This is because a query to a vector database doesn't return specific results — instead it returns results with close similarity. The vector database's index is used to quickly identify the subset of vectors that are closest to a particular query vector, and this significantly reduces the number of computations that would be required to find the similar matches.

The structure of your index will vary depending on which algorithm you used to map your vector data to it. Some examples of common indexing algorithms include product quantization, locality-sensitive hashing, and hierarchical navigable small world.

Search algorithms

The key to a vector database's utility is the search algorithms that are included with it. These algorithms facilitate the similarity search queries that are sent to it, and are used for data retrieval.

During a search query, a vector database is queried to find out what vectors are similar to a particular vector — known as the "query vector." Query vectors are usually generated by the embedding model. For example, if an ML application wants to know which products are similar to a black polo shirt, it passes "back polo shirt" through the embedding model to generate a vector embedding that represents "black polo shirt." That vector embedding becomes the query vector that is sent to the vector database to check for similar vectors.

Vector databases usually default to using an approximate nearest neighbor (ANN) algorithm to determine which vectors are most similar, as it has a good balance between accuracy and speed. It's also possible to switch to other algorithms if more accuracy is required, such as k-nearest neighbors (k-NN) or even a hybrid of the two.

How to integrate a vector database into your AI development workflow

Research the best vector database for your needs

Popular examples include Milvus, QDrant, Pinecone, and Weaviate, but you'll need to consider which one best suits your requirements, while considering performance, scalability, ease of use, community support, and integration with other technologies.

Convert your data into vectors using an embedding model

You need an ML model that produces vectors or you won't have anything to store in your vector database! BERT is a well-known NLP model that produces vector embeddings, and VGG-16 is an example of a computer vision model that converts images into vectors.

Ingest your data into your vector DB so that it can be queried

You need to pass all your original data through your embedding model to transform it into vector embeddings, and then store them in the vector database.

In this example of data being stored in a vector database, some natural language data about products is passed through an embedding model and converted into vector embeddings, which are then stored in the vector database.

Each vector database solution has different APIs, so the method for ingesting your data will be different. This guide shows an example of how it can be done using the Pinecone vector database.

Integrate your vector database queries into your existing workflow

This also involves working with a specific vector database's API, so the precise way to do this will depend on the database system. Here’s an example of how to query Weaviate's vector database using their JavaScript SDK, to show one example of how this can work. In the Weaviate example, the function returns movie recommendations based on similarities to whatever is in the "text" field (which is the query).

Following on from the previous example where product-related data was stored as vector embeddings, the query now asks the model to find something similar to a black polo shirt. The vector embedding for a black polo shirt is compared with the vectors in the database and the most similar vector is found, which is the vector that represents a black t-shirt. Both the vector and the reference to the black t-shirt are returned to the application.

Best practices when using vector databases

These tips and best practices will help speed up and improve the accuracy of your queries, and may even help with storage and cost efficiency, or with scaling your application:

Data pre-processing: You need to cleanse your data, i.e., convert it into the right format for your embedding model.

Choose an appropriate indexing method: Decide on a trade-off between speed and accuracy, but also consider the cost of each method and how much memory each requires.

Implement principal component analysis: Speed up your queries by reducing the dimensionality of your vectors.

Compare different search algorithms: This is generally a trade-off between ANN and k-NN. You should also try tweaking the parameters to balance speed and accuracy to best suit your needs.

Batch queries together: This will save money and also usually save time overall.

Use a distributed vector database: This will allow for better scaling as your data grows.

Use query caching: This will speed up results for common queries.

ML and vector databases help you engage your users with relevant content

The best way to improve content discovery is to use a machine learning model (such as NLP backed by a vector database) to provide your users with relevant content.

Based on your AI-powered content recommendations, you can piece together reusable chunks of text, images, video, and other content, and compose it all into auto-generated articles, scrollable feeds, and product listings that provide relevant, endless engagement to your users.

To get started with composable content, sign up for a free Contentful account, and start seeing the benefits up close.